13.4. Mental Health Detection

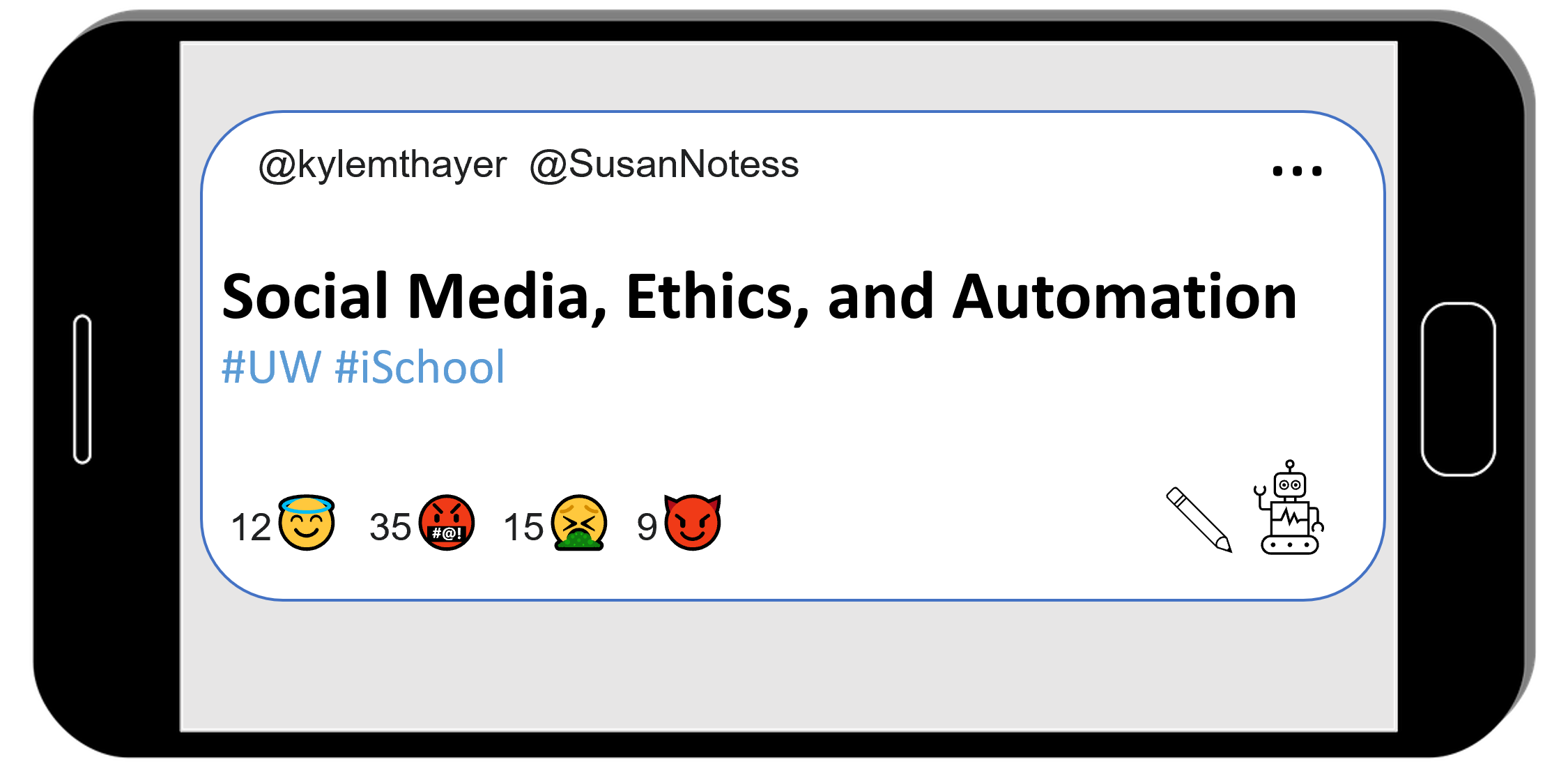

Because social media platforms gather so much data about their users, they can try to use data mining to infer information about those users’ moods, mental health conditions, or neurotypes (e.g., ADHD, Autism).

For example, Facebook has a suicide detection algorithm, which the company uses to try to intervene when it thinks a user may be suicidal (Inside Facebook’s suicide algorithm: Here’s how the company uses artificial intelligence to predict your mental state from your posts). As social media companies have tried to detect talk of suicide, and sometimes to remove content that mentions it, users have worked around these filters by coining new terms, such as “unalive.”

Broader efforts to infer emotions or mental health from data such as social media activity, or iPhone or Apple Watch usage, have produced very questionable results, and any claim to detect emotions reliably is probably false.

These attempts at detecting mental health can also violate privacy or be used for unethical surveillance. For example:

- Your employer might detect that you are unhappy and consider firing you because they think you might not be fully committed to the job.
- Someone might build a system that tries to detect who is Autistic and then force them into an abusive therapy system to try to “cure” them of their Autism (see also this more scientific explanation of that linked article).