8.7. Data Poisoning

People working with datasets always have to deal with problems in their data, stemming from things like mistyped entries, missing data, and the general problem that all data is a simplification of reality.

Sometimes a dataset has so many problems that it is effectively poisoned or not feasible to work with.

8.7.1. Unintentional Data Poisoning

Datasets can be poisoned unintentionally. For example, many scientists post online surveys that people can get paid to take, and getting useful results depends on a wide range of people taking them. But when one TikToker’s video about taking these surveys went viral, the surveys were filled out mostly by one narrow demographic, which prevented many of the datasets from being used as intended.

See more in

A teenager on TikTok disrupted thousands of scientific studies with a single video – The Verge [h21]

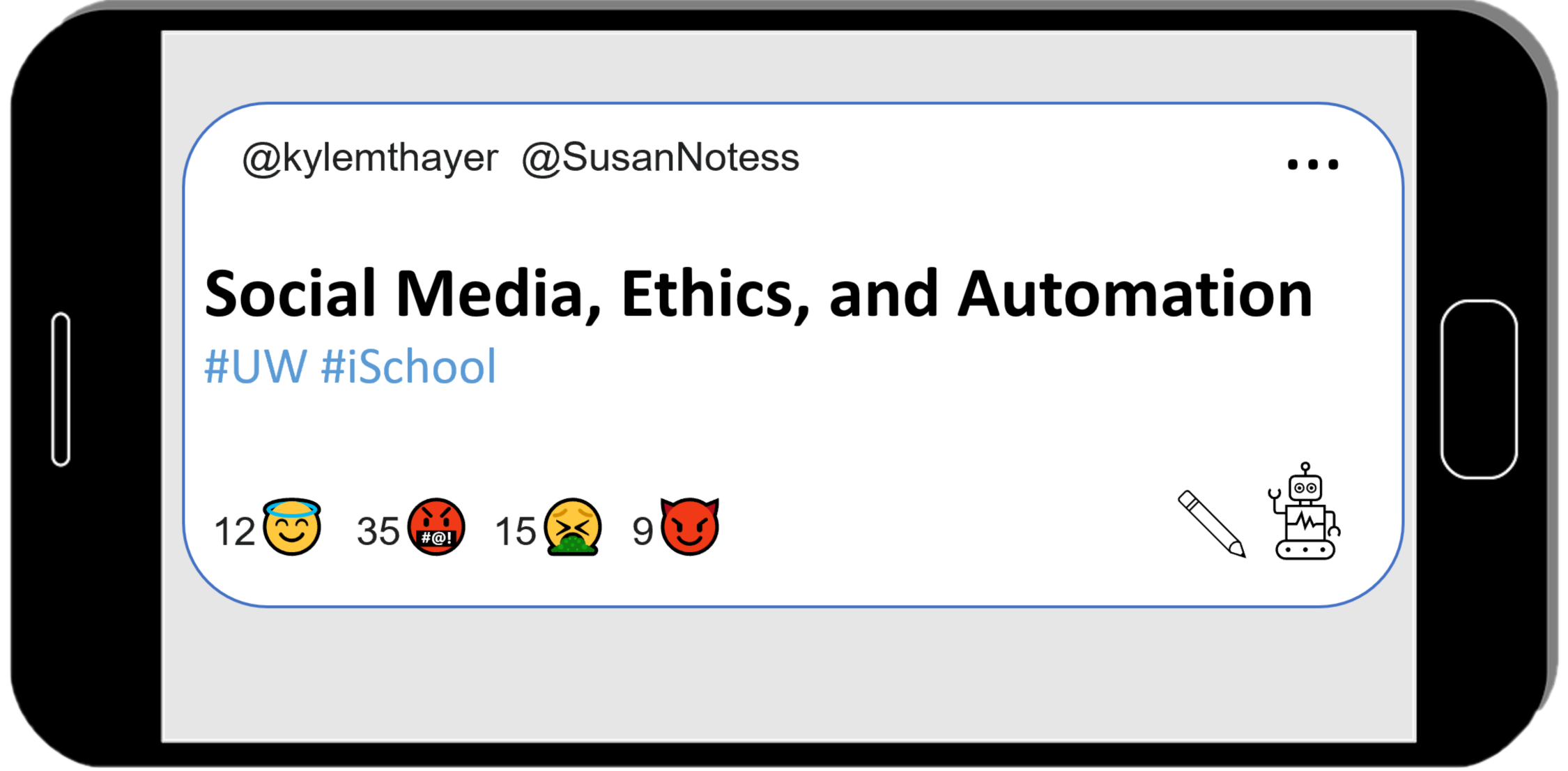
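To see why a flood of responses from one narrow group can make survey data unusable, here is a minimal simulation sketch. Everything in it is made up for illustration: the function name `simulate_survey`, the age ranges, and the respondent counts are assumptions, not figures from the studies described above.

```python
import random


def simulate_survey(n_intended, n_viral, seed=0):
    """Return (mean age before, mean age after) for a hypothetical survey."""
    rng = random.Random(seed)
    # Intended respondents: ages spread widely (hypothetical range 18-80).
    intended = [rng.randint(18, 80) for _ in range(n_intended)]
    # Viral influx: one narrow demographic (hypothetical range 18-22).
    viral = [rng.randint(18, 22) for _ in range(n_viral)]

    before = sum(intended) / len(intended)
    combined = intended + viral
    after = sum(combined) / len(combined)
    return before, after


# Made-up counts: 500 intended respondents, then 4,500 from the viral video.
before, after = simulate_survey(n_intended=500, n_viral=4500)
print(f"mean age before: {before:.1f}, after: {after:.1f}")
```

In this sketch the average age of respondents drops sharply once the viral respondents are added, so any conclusion drawn from the combined data would mostly describe the narrow group rather than the wide range of people the researchers hoped to reach.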

Additionally, spam and output from Large Language Models like ChatGPT can flood information spaces (e.g., email, Wikipedia) with nonsensical, low-value, or false content, making them hard or even impossible to use.


8.7.2. Intentional Data Poisoning

Data can be poisoned intentionally as well. For example, in 2021, workers at Kellogg’s were upset at their working conditions, so they went on strike, refusing to work until Kellogg’s agreed to improve those conditions. Kellogg’s then announced that it would hire new workers to replace the striking workers:

Kellogg’s proposed pay and benefits cuts while forcing workers to work severe overtime as long as 16-hour-days for seven days a week. Some workers stayed on the job for months without a single day off. The company refuses to meet the union’s proposals for better pay, hours, and benefits, so they went on strike.

Earlier this week, the company announced it would permanently replace 1,400 striking workers.

People in the antiwork subreddit [h25] found the website where Kellogg’s posted its job listings to replace the workers. Those Redditors suggested spamming the site with fake applications, poisoning the job application data so that Kellogg’s wouldn’t be able to tell which applications were legitimate (we could consider this a form of trolling). The hope was that Kellogg’s would then be unable to replace the striking workers and would have to agree to better working conditions.

Then Sean Black, a programmer on TikTok, saw this and decided to contribute by creating a bot that would automatically log in and fill out applications with random user info, increasing the rate at which he (and others who used his code) could spam the Kellogg’s job applications:

@seandablack Shortucut soon perhaps #antiwork #workersrights #kelloggs #strike #unions #leftist ♬ original sound - Sean Black
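The dilution effect the Redditors were counting on can be made concrete with a little arithmetic. The sketch below is only an illustration: the function names and the application counts are hypothetical, and it assumes a reviewer cannot tell fake applications from real ones without reading them.

```python
def legitimate_share(n_legit, n_fake):
    """Fraction of all submitted applications that are legitimate."""
    total = n_legit + n_fake
    return n_legit / total if total else 0.0


def reads_per_legitimate(n_legit, n_fake):
    """Average number of applications read per legitimate one found,
    assuming every application has to be read to be judged."""
    if n_legit == 0:
        return float("inf")
    return (n_legit + n_fake) / n_legit


# Made-up numbers: 2,000 real applications mixed with 48,000 fake ones.
share = legitimate_share(2000, 48000)
reads = reads_per_legitimate(2000, 48000)
print(f"legitimate share: {share:.0%}, reads per legitimate: {reads:.0f}")
```

With these made-up numbers, only a small fraction of the applications are real, so a reviewer would have to read many fake applications for each legitimate one. Automating the fake submissions raises `n_fake` quickly, which is why a bot makes this kind of poisoning more effective.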

See also: